- Two-thirds of nuclear medicine technologists worked in hospitals.

- Nuclear medicine technology programs range in length from 1 to 4 years and lead to a certificate, an associate degree, or a bachelor’s degree.

- Faster-than-average job growth will arise from an increase in the number of middle-aged and elderly persons, who are the primary users of diagnostic and treatment procedures.

- The number of job openings each year will be relatively low because the occupation is small; technologists who also are trained in other diagnostic methods, such as radiologic technology or diagnostic medical sonography, will have the best prospects.

Wednesday, September 30, 2009

Nuclear Medicine Technologists

Tuesday, September 29, 2009

New Weather Technology Helps NOAA Storms Lab Develop Pilot Program

When a hurricane or severe storm hits North Carolina, South Carolina or Virginia - as Hurricane Floyd did in 1999 - weather forecasters now anticipate delivering more accurate flood and flash flood warnings.

Scientists are testing new advanced weather technology in a pilot program from the National Oceanic & Atmospheric Administration's (NOAA) National Severe Storms Laboratory (NSSL), NOAA's National Sea Grant College Program and North Carolina and South Carolina Sea Grant state programs.

New software that utilizes Doppler radar data, satellite imagery and other information tools will monitor rainfall in watershed basins as small as one square kilometer.

National Weather Service (NWS) forecasters can use the data to issue more precise and accurate flood and flash flood warnings. Emergency management officials, utility companies and others can use data to better prepare for flood events.

With new technology, researchers hope to mitigate losses such as the tremendous damage caused by Hurricane Floyd. In North Carolina, for example, the statistics were staggering - 52 deaths, 7,000 homes destroyed, 17,000 uninhabitable homes and 57,000 homes damaged.

"This will help save lives and protect property," said North Carolina Sea Grant marine educator Lundie Spence, who was one of several Sea Grant representatives who visited NSSL last year in an effort to develop joint projects within the two NOAA agencies.

"If we can assist the local NWS Forecast Office with implementation of new technology that provides additional details about a severe storm system, specific amounts of rainfall and the type of precipitation, people will have more opportunity to prepare to evacuate areas," Spence added.

The project has two phases: collecting regional radar data in "real time" at a single location at Wilmington, N.C., and creating Web-based flash flood guidance software using real time radar data.

The regional or multi-state images, which will be available by late September, will combine raw data from several National Weather Service Doppler radars in coastal North and South Carolina and coastal southern Virginia.

"Currently, each NWS office can only get Doppler radar data from one or two radars at a time," said Kevin Kelleher, deputy director of the National Severe Storms Lab in Norman, Okla.

"With this new technology, we will be able to provide regional, multi-radar images and forecast products using realtime Doppler data to NWS forecasters and emergency managers extending from coastal Georgia to Washington, D.C.," Kelleher added.

"The ability to receive multi-sensor estimates of accumulated rainfall for individual river basins should really be helpful in managing flood situations."

By December, the second phase of the project will be nearly complete. Data will be available on the World Wide Web to a variety of users, including weather forecasters, emergency management officials, mariners, scientists and commercial users in the power and agricultural sectors. The data will include information on the amount of rainfall that has fallen in each river basin and an indication of the likelihood that flooding will occur.

"Web-based data will include color maps that will help emergency management officials more quickly and accurately identify the areas at greatest risk for flood," said South Carolina Sea Grant extension program leader Robert Bacon. "For example, they can more effectively target areas for evacuation and position their response and recovery resources to assist flood victims."

The National Sea Grant Program is a university-based program that promotes the wise use and stewardship of coastal and marine resources through research, outreach and education.

Thursday, September 24, 2009

Ford's New Car in India

Ford has announced that it will build a new car in India, as part of its $500m (£304m) investment plan in the country.

Manufacturing will begin on the Ford Figo at the carmaker's plant in Chennai in the first quarter of 2010.

Ford has described the Figo - which is Italian slang for "cool" - as a "game changer" and said it was confident it would be popular with Indian consumers.

The Figo will compete in India's small car segment, which makes up about 70% of the new vehicle market.

The car will initially only be for sale in India, but there are plans to export it to other countries, Ford said.

In April, the world's cheapest car, the Tata Nano, went on sale in India. The Nano is 10 feet (3 metres) long.

"[The Figo] reflects our commitment to compete with great products in all segments of this car market," said Ford president and chief executive Alan Mulally.

Wednesday, September 23, 2009

Fuel Cell Vehicles

Although they are not expected to reach the mass market before 2010, fuel cell vehicles (FCVs) may someday revolutionize on-road transportation.

This emerging technology has the potential to significantly reduce energy use and harmful emissions, as well as our dependence on foreign oil. FCVs will have other benefits as well.

A Radical Departure

FCVs represent a radical departure from vehicles with conventional internal combustion engines. Like battery-electric vehicles, FCVs are propelled by electric motors. But while battery electric vehicles use electricity from an external source (and store it in a battery), FCVs create their own electricity. Fuel cells onboard the vehicle create electricity through a chemical process using hydrogen fuel and oxygen from the air.

FCVs can be fueled with pure hydrogen gas stored onboard in high-pressure tanks. They also can be fueled with hydrogen-rich fuels, such as methanol, natural gas, or even gasoline, but these fuels must first be converted into hydrogen gas by an onboard device called a "reformer."

FCVs fueled with pure hydrogen emit no pollutants, only water and heat, while those using hydrogen-rich fuels and a reformer produce only small amounts of air pollutants. In addition, FCVs can be twice as efficient as similarly sized conventional vehicles and may also incorporate other advanced technologies to increase efficiency.

Meeting Challenges Together

Before FCVs make it to your local auto dealer, significant research and development is required to reduce cost and improve performance. We must also find effective and efficient ways to produce and store hydrogen and other fuels.

Automakers, fuel cell developers, component suppliers, government agencies, and others are working hard to accelerate the introduction of FCVs. Partnerships such as the DOE-led FreedomCAR initiative and the California Fuel Cell Partnership have been formed to encourage private companies and government agencies to work together to move these vehicles toward commercialization.

FreedomCAR

FreedomCAR is a new cooperative research effort between the DOE and the U.S. Council for Automotive Research (Ford, General Motors, and DaimlerChrysler) formed to promote research into advanced automotive technologies, such as FCVs, that may dramatically reduce oil consumption and environmental impacts. FreedomCAR's goal is the development of cars and trucks that are:

- Cheaper to operate

- Pollution-free

- Competitively priced

- Free from imported oil

California Fuel Cell Partnership (CaFCP)

Tuesday, September 22, 2009

The New Music Technology

Teenagers from across Wiltshire will be finding out more about using technology to make their own music over the coming weeks as they take part in week-long courses run as part of an exciting new music project.

MusicXpress is a Music Technology project which aims to give young people from across Wiltshire the chance to DJ and build skills in technology, often for the first time. Funded by Youth Music, The Paul Hamlyn Foundation and the Vodafone UK Foundation, it is run by Wiltshire Youth Arts Partnership (WYAP) and the Wiltshire Music Centre, in partnership with Wiltshire Youth Development Service, Salisbury College and St Laurence School. The 18-month project will offer courses and outreach sessions in Youth Centres in all areas of the county.

Beginning in July, holiday courses take place during school holidays at the Wiltshire Music Centre and Salisbury College. Young people will be able to develop skills in DJing and Music Technology, creating new tracks and lyrics to be burned onto CD. There will also be the opportunity for school leavers or college students to work as peer mentors alongside the music leaders, gaining further training in technology. The Bradford on Avon course runs August 14-18, and the Salisbury course runs from August 29 - September 1. Courses cost just £10 per participant for the week, and help can be offered with travel.

Outreach sessions will be held around the county between now and October 07. Sessions have recently taken place in Malmesbury, Tidworth, Corsham and Durrington Youth Development Centres, with more venues planned for the autumn term.

There are still places available on both the Bradford and Salisbury holiday courses, and anyone interested in booking should contact WYAP (T: 01249 716681).

MusicXpress are actively seeking new venues to host sessions in Autumn 2006 and 2007, and interested youth clubs or youth projects should contact WYAP.

Following a successful pilot project in 2004/05, 'MusicXpress 2' has been made possible by grants totalling £73,500, from Youth Music, The Paul Hamlyn Foundation and the Vodafone UK Foundation.

Monday, September 21, 2009

Your Mileage

EPA has improved its methods for estimating fuel economy, but your mileage will still vary.

EPA tests are designed to reflect "typical" driving conditions and driver behavior, but several factors can affect MPG significantly:

- How & Where You Drive

- Vehicle Condition & Maintenance

- Fuel Variations

- Vehicle Variations

- Engine Break-In

Therefore, the EPA ratings are a useful tool for comparing the fuel economies of different vehicles but may not accurately predict the average MPG you will get.

To find out what you can do to improve the fuel economy of your car, see Driving More Efficiently and Keeping Your Car in Shape.

Friday, September 18, 2009

The New Face of War

Nicholas Negroponte, head of MIT’s Media Lab, observed that the information age is fast replacing atoms with bits: movies on film with packets on the Internet, print media with digital media, and wires with digital radio waves.

Negroponte does not apply the bits-for-atoms principle to warfare, but Bruce Berkowitz, in The New Face of War, does. According to Berkowitz, a senior analyst at RAND and a former intelligence officer, future wars will not be won by having more atoms (troops, weapons, territory) than an opponent, but by having more bits . . . of information.

Berkowitz argues that atoms that used to be big winners will become big losers to information technology. Reconnaissance sensors will quickly find massed troops, enabling adversaries to zap those troops with precision-guided weapons. Fortifications will tie armies down to fixed locations, making them sitting ducks for smart bombs. Cheap cyber weapons (e.g., computer viruses) will neutralize expensive kinetic weapons (e.g., missile defenses).

Berkowitz sums up the growing dominance of bits over atoms: “The ability to collect, communicate, process, and protect information is the most important factor defining military power.” The key phrase here is “the most important factor.” The New Face of War gives many historical examples of information superiority proving to be an important factor in defining military power, such as the Allies breaking German and Japanese codes during World War II and Union forces employing disinformation to mislead Confederates in the Civil War. But the digital revolution has transformed information from supporting actor to leading lady.

Evidence that this revolution has already occurred abounds. In the 1990 Gulf War, smart weapons turned Saddam’s strength (concentrated troops and tanks) into liabilities. More recently, al-Qa’ida used the global telecommunications net to coordinate successful attacks by small, stealthy groups who triumphed through information superiority (knowing more about their targets than their targets knew about them).

Perhaps the biggest effect of information technology on warfare will be the elimination of the concept of a front, according to Berkowitz. If fronts persist at all, they will live in cyberspace where info-warriors battle not over turf, but over control of routers, operating systems, and firewalls. Even so, The New Face of War argues that there will be no electronic “Pearl Harbors” on the emerging battlefield of bits because disabling a nation’s information technology (IT) infrastructure will be too hard even for the most sophisticated cyber-warriors. Well-timed, pinpoint computer network attacks will be much more likely.

Dr. Berkowitz’s vision of the future is probably right in many respects and off target in a few others. But, regardless of its accuracy, his book surfaces critical questions for the Intelligence Community.

First, the things he gets right and what these mean for intelligence: Information technology has changed warfare not by degree, but in kind, so that victory will increasingly go to combatants who maneuver bits faster than their adversaries. Thus, intelligence services will need an increasing proportion of tech-savvy talent to track, target, and defend against adversaries’ IT capabilities. As countries like China, India, Pakistan, and Russia grow their IT talent base—and IT market share—faster than the United States, the strengths of their intelligence services will likely increase relative to those of US intelligence.

Because cyber-wars will be played out on landscapes of commercial IT, intelligence agencies will need new alliances with the private sector, akin to existing relationships between nation states. And the Intelligence Community will have to confront knotty problems such as: performing intelligence preparation of cyber battlefields; assessing capabilities and intentions of adversaries whose info-weapons and defenses are invisible; deciding whether there is any distinction between cyber defense and cyber intelligence; and determining who in the national security establishment should perform functions that straddle the offensive, defensive, and intelligence missions of the uniformed services and intelligence agencies.

The growing importance of IT in warfare will also change the way intelligence agencies support atom-based conflicts. New technology will collect real-time intelligence for fast-changing tactical engagements, but the mainstay product of the Intelligence Community, serialized reports, is far too slow for disseminating these high-tech indications and warnings. Faster means of delivering and protecting raw collection must be devised, so that real-time intelligence can be sent directly to shooters without detouring through multiple echelons of military intelligence analysts. Also, remote sensors designed to report on the capabilities, intentions, and activities of armed forces will not find lone terrorists. Radically new sensing networks that blanket the globe will be needed to collect pinpoint intelligence on individual targets.

The distinction between intelligence and tactical operations data (such as contact reports and significant activity reports) will blur as national intelligence means are focused on real-time tactical missions. All-source analysts will need to add tactical operations reporting to their diet of HUMINT, SIGINT, IMINT, OSINT and MASINT.

Now, the areas in which The New Face of War misses the mark: First, military power in the future will not flow solely from precision zapping and deployment of small, networked forces. Some missions, such as peacekeeping, will always demand the highly visible presence of large forces. And if numbers do not matter anymore, as Berkowitz suggests, why worry about North Korea’s million-plus army? The bottom line is that as intelligence agencies get better at tracking and collecting on individual terrorists, they will still need robust targeting and force protection capabilities against large conventional forces.

The evolution of media, with which we began this discussion, teaches powerful lessons about the folly of too quickly abandoning the old for the new. The printing press did not abolish handwriting; motion pictures did not kill live theater; television did not doom radio; and the Internet did not extinguish magazines. For each of these transitions from old to new, there were plenty of pundits who prophesied the demise of legacy forms of communication at the hands of new information technology.

Berkowitz is in good company, though. The US Air Force was so sure that close air combat was obsolete that the first F-4 fighters did not have cannons. They relied instead on high-tech air-to-air missiles, until the F-4s fell victim to the cannons of North Vietnamese MiGs in “obsolete” air combat. Low-tech weapons on the F-4 ultimately did not yield to high-tech missiles; they simply moved over and made room for them. And today’s newest generation of fighters still retains cannons.

There is an important lesson here for intelligence agencies: As novel collection, analytic, and dissemination technologies are acquired, traditional tradecraft should be retained to cope with traditional adversaries and tactical situations. Just as missiles did not replace cannons, legacy tradecraft will need to be preserved but continuously improved to track changes in conventional warfare. For example, imaging satellites will always be essential, but they will have to steadily increase resolution and dwell time. Ditto for traditional SIGINT and MASINT collection systems.

I also disagree with Berkowitz’s contention that there can be no electronic Pearl Harbors. The inexorable migration to the Internet of such diverse functions as telephony, power plant control, commercial data networks, and defense communications has already created a “one-stop-shop” target for info-warriors. In essence, industrialized nations have done in cyberspace what Berkowitz says is so perilous in physical space: namely, concentrated all their eggs in one basket. Intelligence agencies should not, therefore, abandon the hope of severely crippling a cyber enemy, nor should they assume a cyber enemy could not return the favor.

Despite these shortcomings, The New Face of War is an eminently enjoyable read, jam-packed with fascinating historical examples of information technology at war. Dr. Berkowitz’s experience as an intelligence officer comes through clearly in his book, providing important context and relevance for intelligence collectors, analysts, and disseminators.

Put another way, whether consumed as atoms or bits, The New Face of War is a must read for all intelligence professionals.

Thursday, September 17, 2009

Wednesday, September 16, 2009

Water Energy Technology Team

Extracting raw energy resources, cooling power plants, and powering the generators for hydroelectric energy all use water.

People also use natural waterways to dilute and disperse pollutants.

Extracting, purifying, pumping and transporting drinking water, heating water for domestic and other use, and treating and disposing of wastewater are all activities that use energy. In agricultural and industrial settings, both resources are essential to sustaining economic output.

To minimize resource depletion and maximize human and environmental benefits, it is essential to understand the interdependence of water and energy use.

Tuesday, September 15, 2009

Super-Hard and Slick Coatings Win R&D 100 Award

Argonne National Laboratory (ANL) began researching super slick coatings in 2005. The R&D 100 Awards recognize the top scientific and technological innovations of the past year. ANL scientists have won 105 R&D 100 awards since the awards were first introduced in 1964. The Vehicle Technologies Program has received 20 awards for various technologies created in collaboration with five different laboratories, universities and industry.

Super-hard and slick coatings (SSC) can improve the performance of all kinds of moving mechanical systems, including engines. Friction, wear, and lubrication strongly affect the energy efficiency, durability, and environmental compatibility of such systems. For example, frictional losses in an engine may account for 10-20 percent of the total fuel energy, depending on factors such as engine size and type, driving conditions, and weather. The amount of emissions produced by these engines is also strongly related to their fuel economy: in general, the higher the fuel economy, the lower the emissions. In fact, achieving higher fuel economy and lower emissions is one of the most important goals for all industrialized nations. SSC, with its self-lubricating and low-friction nature, can help to increase the fuel economy of future engines.

The SSC is a designer coating: The ingredients used to make it were predicted by a crystal-chemical model proposed by its developers. In laboratory and engine tests, SSC reduced friction by 80 percent compared to uncoated steel and virtually eliminated wear under severe boundary-lubricated sliding regimes.

Tribology is the science and technology of interacting surfaces in relative motion. Tribological materials in future engine systems will be subjected to much higher thermal and mechanical loads and will be supplied with less effective but more environmentally sound lubricants in much reduced quantities. The energy savings and environmental benefits of using SSC are real. As of 2009, the United States consumes nearly 13 million barrels of oil per day to power motor vehicles. The total energy losses resulting from friction in these vehicles are estimated to account for about 15 percent of the fuel's energy. Therefore, if these losses can be reduced by one third by advanced friction control technologies like SSC, billions of dollars could be saved every year.
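The "billions of dollars" estimate can be checked with a rough back-of-the-envelope calculation. The sketch below uses the figures quoted above (13 million barrels per day, 15 percent friction losses, a one-third reduction). Two things are assumptions made only for illustration and do not come from the article: the oil price of $70 per barrel, and the simplification that oil consumed scales directly with fuel energy lost to friction. The function name is likewise hypothetical.

```python
# Figures quoted in the text above.
BARRELS_PER_DAY = 13_000_000  # U.S. oil used by motor vehicles, as of 2009
FRICTION_SHARE = 0.15         # share of fuel energy lost to friction
REDUCTION = 1 / 3             # fraction of friction losses removed

# Assumption for illustration only; not a figure from the article.
ASSUMED_PRICE_PER_BARREL = 70.0  # U.S. dollars


def annual_friction_savings(barrels_per_day, friction_share, reduction,
                            price_per_barrel, days_per_year=365):
    """Return (barrels saved per year, dollars saved per year).

    Simplification: oil consumption is treated as proportional to the
    fuel energy lost to friction.
    """
    barrels_saved = barrels_per_day * friction_share * reduction * days_per_year
    return barrels_saved, barrels_saved * price_per_barrel


if __name__ == "__main__":
    barrels, dollars = annual_friction_savings(
        BARRELS_PER_DAY, FRICTION_SHARE, REDUCTION, ASSUMED_PRICE_PER_BARREL
    )
    print(f"{barrels / 1e6:.1f} million barrels per year")
    print(f"${dollars / 1e9:.1f} billion per year")
```

Under these assumptions the saving comes to roughly 237 million barrels a year, or about $16.6 billion at the assumed price, which is consistent with the "billions of dollars" claim. A different oil price changes the dollar figure proportionally.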

Because SSC allows for less friction in engines, it helps manufacturers produce more fuel-efficient vehicles. This in turn helps Americans save money at the gas pump, and boosts the American auto industry by helping manufacturers produce more appealing cars because of increased fuel efficiency.

Funding for this research was provided by the DOE's Office of Energy Efficiency and Renewable Energy Vehicle Technologies Program. The SSC was jointly developed by a team from Argonne and Istanbul Technical University. Galleon International Co. of Brighton, MI, and Hauzer Technocoating of the Netherlands have licensed the patents.

Monday, September 14, 2009

Nuclear Medicine Technology

Under supervision, prepares, measures, and administers radiopharmaceuticals in diagnostic and therapeutic studies, and performs nuclear medicine procedures on patients of all ages utilizing various types of imaging equipment.

Nuclear Medicine Technologists perform a wide variety of exacting technical tests and procedures using radioactive materials and the operation of radiation detection devices. Incumbents receive supervision from a technical supervisor, but receive direction from a physician who establishes protocols, reviews procedures, and interprets results.

• Perform a full range of prescribed nuclear medicine imaging techniques, such as brain, liver, and kidney scans and thyroid activity measurements, used in medical diagnosis and evaluation;

• Calibrate and draw up radiopharmaceutical materials and administer them by mouth, injection, or other means to patients;

• Formulate radiopharmaceutical materials from pre-prepared kits;

• Evaluate recorded images for technical quality;

• Operate cameras that detect and map the radioactive drug in a patient’s body;

• Calculate data to include results of patient studies and radioactive decay;

• Process computerized image data to include function curves and reconstructed SPECT images and accurately transfer images to the Picture Archive Communication System (PACS);

• Prepare and maintain records concerning radiopharmaceutical receiving, dispensing, and disposal activities;

• Administer thyroid therapy to patients who have undergone prior clinical and nuclear medicine diagnosis;

• Ensure decay of the pharmaceutical is accounted for and calculate the correct dosage using a dose calibrator;

• Observe patient dosage to ensure proper treatment under direct supervision of a radiologist;

• Monitor therapy patient’s room for radioactivity and review room upon discharge;

• Assume care for physical and psychological needs of patients of all ages during examination;

• Schedule patients for exams and explain test procedures, as necessary;

• Initiate life support measures for patient, if necessary;

• Assist in administering therapeutic procedures;

• Research patient chart for evaluation and/or procedure planning;

• Draw blood for diagnostic nuclear medicine tests;

• Calibrate equipment and instruments to maintain quality control standards;

• Calculate the strength of radioactive materials;

• Monitor work areas for radioactivity;

• Clean/store contaminated instruments and gloves;

• Instruct student technologists, residents and physicians in nuclear medicine techniques;

• Accurately bill procedures performed in nuclear medicine;

• Keep informed on new technical developments;

• Maintain inventory of supplies;

• Maintain compliance with radiation safety policies and documentation that keep the radiation dose to workers and patients as low as reasonably achievable;

• Perform other related duties as required.

Successful completion of a recognized Nuclear Medicine training program, and certification by the State of California to practice as a Nuclear Medicine Technologist (CNMT) upon appointment.

Possession of current certification in CPR and Basic Life Support.

Sufficient education, training and experience to demonstrate possession of the knowledge and abilities listed below.

• Principles, procedures, practices, terminology and equipment used throughout the Nuclear Medicine Department;

• Proper safety precautions and procedures involved in handling specimens, equipment, instruments and supplies used in nuclear medicine;

• General knowledge of radioactive materials commonly used in a hospital;

• Precautions necessary for patient and staff in use of radioactive materials;

• Phlebotomy techniques;

• Basic computer skills.

• Perform standard diagnostic procedures using radioactive isotopes and prepare reports resulting from such procedures;

• Comprehend and carry out technical medical instructions with accuracy;

• Perform required sequences of exacting procedures with accuracy and necessary speed;

• Use and adjust radiation counting equipment;

• Accurately measure extremely small quantities of liquid samples;

• Withdraw blood samples from patients and perform required tests;

• Keep accurate records of receipt, use and disposal of all radioactive materials;

• Troubleshoot, document, and communicate equipment problems;

• Calibrate instruments and equipment;

• Deal with patients sympathetically and tactfully.

Friday, September 11, 2009

ITL Security Bulletins

Thursday, September 10, 2009

Future Trends in Ship Size

Although the ship may be technologically feasible, there must be a level of trade sufficient to support such a vessel. Of equal or greater importance, there must be shoreside facilities to match its capacity. The major problem is the need to minimize port time. (There is a truism that a transportation asset, whether ship, aircraft, rail car, or truck, must be in motion to assure its economic survival.) In addition, and of great importance, the harbor waters, berths, and approach channels must be of sufficient depth, and the berths themselves must be large enough and properly equipped to handle the larger (longer, wider, and deeper) vessel.

In the case of this mega-container ship, the terminal must have sufficient area to accommodate the larger number of boxes that will accumulate before the ship arrives and as she is being discharged and loaded; crane capacity (in terms of both the number of cranes and their cycle time) must be sufficient to minimize port stays; and, needless to say, the requirements for sufficient water depth and appropriate vessel berths must be considered.

We believe that we have not seen the practicable upper limit of container ship size in the 7,000-plus-TEU vessels now in existence. An eventual ceiling might be found around the 10,000 to 12,000 TEU level. Market forces will continue to influence the evolution of the system as long as it moves in a way that continues to provide improvements in cost, reliability, speed, and customer satisfaction.

Wednesday, September 09, 2009

NASA Launches New Technology: An Inflatable Heat Shield

The Inflatable Re-entry Vehicle Experiment, or IRVE, was vacuum-packed into a 15-inch diameter payload "shroud" and launched on a small sounding rocket from NASA's Wallops Flight Facility on Wallops Island, Va., at 8:52 a.m. EDT. The 10-foot diameter heat shield, made of several layers of silicone-coated industrial fabric, inflated with nitrogen to a mushroom shape in space several minutes after liftoff.

The Black Brant 9 rocket took approximately four minutes to lift the experiment to an altitude of 131 miles. Less than a minute later it was released from its cover and started inflating on schedule at 124 miles up. The inflation of the shield took less than 90 seconds.

"Our inflation system, which is essentially a glorified scuba tank, worked flawlessly and so did the flexible aeroshell," said Neil Cheatwood, IRVE principal investigator and chief scientist for the Hypersonics Project at NASA's Langley Research Center in Hampton, Va. "We're really excited today because this is the first time anyone has successfully flown an inflatable reentry vehicle."

According to the cameras and sensors on board, the heat shield expanded to its full size and went into a high-speed free fall. The key focus of the research came about six and a half minutes into the flight, at an altitude of about 50 miles, when the aeroshell re-entered Earth's atmosphere and experienced its peak heating and pressure measurements for a period of about 30 seconds.

An onboard telemetry system captured data from instruments during the test and broadcast the information to engineers on the ground in real time. The technology demonstrator splashed down and sank in the Atlantic Ocean about 90 miles east of Virginia's Wallops Island.

"This was a small-scale demonstrator," said Mary Beth Wusk, IRVE project manager, based at Langley. "Now that we've proven the concept, we'd like to build more advanced aeroshells capable of handling higher heat rates."

Inflatable heat shields hold promise for future planetary missions, according to researchers. To land more mass on Mars at higher surface elevations, for instance, mission planners need to maximize the drag area of the entry system. The larger the diameter of the aeroshell, the bigger the payload can be.

The Inflatable Re-entry Vehicle Experiment is an example of how NASA is using its aeronautics expertise to support the development of future spacecraft. The Fundamental Aeronautics Program within NASA's Aeronautics Research Mission Directorate in Washington funded the flight experiment as part of its hypersonic research effort.

Tuesday, September 08, 2009

The Mega-Container Ship

Monday, September 07, 2009

Technology Literacy Challenge Fund

The fund would provide states with maximum flexibility. To receive funds, states would have to meet only these basic objectives:

- Each state would develop a strategy for enabling every school in the state to meet the four technology goals. These state strategies would address the needs of all schools, from the suburbs to the inner cities to rural areas. Strategies would include benchmarks and timetables for accomplishing the four goals, but these measures would be set by each state, not by the federal government.

- State strategies would include significant private-sector participation and commitments, matching at least the amount of federal support. Commitments could be met by volunteer services, cost reductions, and discounts for connections under the expanded Universal Service Fund provisions of the Telecommunications Act of 1996, among other ways.

- To ensure accountability, each state not only would have to set benchmarks, but also would be required to report publicly at the end of every school year the progress made in achieving its benchmarks, as well as how it would achieve the ultimate objectives of its strategies in the most cost-effective manner.

Friday, September 04, 2009

About CAUBE

CAUBE.AU was formed to represent the views of those in Australia who are opposed to the advertising practices which are collectively known as email spam. Consumers are increasingly hostile to spam, whether it comes from unknown or known senders. CAUBE.AU is an all-volunteer grassroots organisation dedicated to eliminating spam from electronic mail boxes, with a focus on Australia's role in preventing spam. We pursue these aims through community education programs, by providing this site, which is a central repository for information on spam in Australia, and by providing advice to the government on how best to deal with the spam problem.

We have been involved in numerous activities that have improved the regulatory situation in Australia, including activities leading up to the enactment of the Spam Act 2003, consultations on the best practice model for electronic commerce, Parliamentary committee inquiries, and industry code development.

Recognising that the trends in spam volumes clearly demonstrate that spam is a real danger to the value of electronic mail, the Australian Government and the Australian Parliament acted to ban most spam, passing the Spam Bill 2003 in December 2003. This reflects the reality that by its nature, the spam problem cannot be solved without legislation designed to deal with spam. The resulting Spam Act 2003 (Cth) outlaws the vast majority of spam.

While we regard legislation as necessary for consumer protection, we also value the role of education. Education cannot address the problem of unrepentant spammers who would happily destroy the Internet if it would earn them a few bucks in change, but there are those who spam simply because they are not aware of the destructive nature of that method of advertising. We believe that many of these people, when given a reasonable and balanced representation of the facts, will agree that spam is an inexcusably unethical method of promoting their products.

Thursday, September 03, 2009

Monitoring the Work Place

Generally, monitoring of staff's use of email, the internet and other computer resources, where staff have been advised about that monitoring, would be allowed. The Office has issued advisory guidelines on Workplace Email, Web Browsing and Privacy. These recommend steps that organisations can take to ensure that their staff understand the organisation's position on this issue through the development of clear policies.

Some other laws deal specifically with the use of surveillance or listening devices. For example, the Telecommunications (Interception and Access) Act 1979 (Cth) sets rules for intercepting telephone communications.

Wednesday, September 02, 2009

NIST Study Finds a Decade of High-Payoff, High-Throughput Research

In its first decade of work, a research effort at the National Institute of Standards and Technology (NIST) to develop novel and improved "combinatorial" techniques for polymer research—an effort that became the NIST Combinatorial Methods Center (NCMC)—realized economic benefits of at least $8.55 for every dollar invested by NIST and its industry partners, according to a new economic analysis.

The new study,* conducted for NIST by RTI International, estimates that from inception (1998) through 2007, the investment in the NCMC has yielded a social rate of return of about 161 percent (also known as the "internal rate of return" in corporate finance; a minimum acceptable IRR for a government research project is about 50 percent). RTI’s evaluation also found that the NCMC accelerated industry’s adoption of combinatorial methods by an average of 2.3 years.
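For readers unfamiliar with the measure, the internal rate of return is the discount rate at which the net present value of a stream of costs and benefits equals zero. The following is a minimal sketch of that calculation using bisection. The cash flows are hypothetical and are not figures from the RTI report, and the search bounds are an assumption that holds only when the net present value changes sign once between them.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value of yearly cash flows, with year 0 first."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], low: float = -0.99, high: float = 10.0,
        tol: float = 1e-9) -> float:
    """Rate at which NPV is zero, found by bisection between low and high."""
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("NPV does not change sign between the search bounds")
    while high - low > tol:
        mid = (low + high) / 2.0
        f_mid = npv(mid, cash_flows)
        if f_low * f_mid <= 0:
            high = mid               # root lies in the lower half
        else:
            low, f_low = mid, f_mid  # root lies in the upper half
    return (low + high) / 2.0


if __name__ == "__main__":
    # Hypothetical project: spend 100 in year 0, receive benefits afterwards.
    flows = [-100.0, 40.0, 120.0, 260.0]
    print(f"IRR: {irr(flows):.1%}")
```

A project clears a hurdle rate, such as the roughly 50 percent threshold mentioned above, when its computed rate exceeds that threshold.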

RTI surveyed and interviewed polymer scientists at NCMC member institutions, as well as from the much larger body of research universities and chemical companies who benefited from the center’s research and outreach. The study found that the NCMC has had a significant impact on the development and use of combinatorial research methods for polymers and other organic materials, both in the development of novel techniques and data and the diffusion of research results to the larger polymers research community.

Started as a NIST pilot project in 1998 and formally established in 2002, the NCMC was conceived as a community effort—supported by NIST and industry membership fees—to develop methods for discovering and optimizing complex materials such as multicomponent polymer coatings and films, adhesives, personal care products and structural plastics. Indeed, the RTI report lauded the novel "open source" consortium model that NIST developed for the center as a main reason for its success and impact. The program has since branched out into nanostructured materials, organic electronics, and biomaterials.

The big idea behind combinatorial and high-throughput research is to replace traditional, piecemeal approaches to testing new compounds with methods that can synthesize and test large numbers of possible combinations simultaneously and systematically. NIST in particular pioneered the idea of "continuous gradient libraries," polymer test specimens whose properties change gradually and regularly from one side to the other so that the behavior of a huge number of possible mixtures can be evaluated at the same time. (For example, see "Designer Gradients Speed Surface Science Experiments," NIST Tech Beat, June 8, 2006, at http://www.nist.gov/public_affairs/techbeat/tb2006_0608.htm#designer, and "Wetter Report: New Approach to Testing Surface Adhesion," NIST Tech Beat, May 10, 2007, at http://www.nist.gov/public_affairs/techbeat/tb2007_0510.htm#wet.)
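The gradient-library idea can be illustrated with a toy model. This is a sketch only: the linear composition profile, the specimen length, and the function name `gradient_library` are assumptions made for illustration and do not describe NIST's actual specimens or software. It shows how a single specimen with a smoothly varying composition yields many distinct mixtures, one per measurement position.

```python
def gradient_library(start_fraction: float, end_fraction: float,
                     length_mm: float, n_points: int) -> list[tuple[float, float]]:
    """Return (position_mm, fraction_of_component_A) pairs along a linear gradient."""
    if n_points < 2:
        raise ValueError("need at least two measurement points")
    step = length_mm / (n_points - 1)
    slope = (end_fraction - start_fraction) / length_mm
    return [(i * step, start_fraction + slope * i * step) for i in range(n_points)]


if __name__ == "__main__":
    # One hypothetical 50 mm specimen, measured at 11 evenly spaced positions.
    for position, fraction in gradient_library(0.0, 1.0, 50.0, 11):
        print(f"{position:5.1f} mm: {fraction:.2f} component A")
```

Each measurement position stands in for a separately prepared sample in the traditional one-at-a-time approach.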

The NCMC has contributed advances in three major platform infratechnologies for creating combinatorial "libraries" for materials research—gradient thin films, and discrete libraries created by robotic dispensing systems and microfluidics systems—as well as several new high-throughput measurement methods and information technologies to manage the vast amount of data produced by combinatorial analysis. The center's research program is matched with an outreach effort to disseminate research results through open workshops and training programs.

The RTI report, Retrospective Economic Impact Assessment of the NIST Combinatorial Methods Center, is available online at www.nist.gov/director/prog-ofc/report09-1.pdf. The Web home page of the NIST Combinatorial Methods Center is at http://www.nist.gov/msel/polymers/combi.cfm.

Tuesday, September 01, 2009

NIST Scientists Study How to Stack the Deck for Organic Solar Power

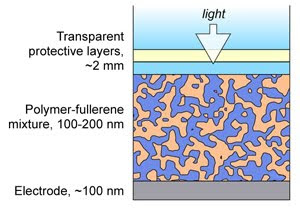

Organic photovoltaics, which rely on organic molecules to capture sunlight and convert it into electricity, are a hot research area because in principle they have significant advantages over traditional rigid silicon cells. Organic photovoltaics start out as a kind of ink that can be applied to flexible surfaces to create solar cell modules that can be spread over large areas as easily as unrolling a carpet. They’d be much cheaper to make and easier to adapt to a wide variety of power applications, but their market share will be limited until the technology improves. Even the best organic photovoltaics convert less than 6 percent of light into electricity and last only a few thousand hours. “The industry believes that if these cells can exceed 10 percent efficiency and 10,000 hours of life, technology adoption will really accelerate,” says NIST’s David Germack. “But to improve them, there is critical need to identify what’s happening in the material, and at this point, we’re only at the beginning.”

The NIST team has advanced that understanding with their latest effort, which provides a powerful new measurement strategy for organic photovoltaics that reveals ways to control how they form. In the most common class of organic photovoltaics, the “ink” is a blend of a polymer that absorbs sunlight, enabling it to give up its electrons, and ball-shaped carbon molecules called fullerenes that collect electrons. When the ink is applied to a surface, the blend hardens into a film that contains a haphazard network of polymers intermixed with fullerene channels. In conventional devices, the polymer network should ideally all reach the bottom of the film while the fullerene channels should ideally all reach the top, so that electricity can flow in the correct direction out of the device. However, if barriers of fullerenes form between the polymers and the bottom edge of the film, the cell’s efficiency will be reduced.

By applying X-ray absorption measurements to the film interfaces, the team discovered that changing the nature of the electrode surface can make it repel fullerenes (much as oil repels water) while attracting the polymer. The electrical properties of the interface also change dramatically. The resultant structure gives the light-generated photocurrent more opportunities to reach the proper electrodes and reduces the accumulation of fullerenes at the film bottom, both of which could improve the photovoltaic’s efficiency or lifetime.

“We’ve identified some key parameters needed to optimize what happens at both edges of the film, which means the industry will have a strategy to optimize the cell’s overall performance,” Germack says. “Right now, we’re building on what we’ve learned about the edges to identify what happens throughout the film. This knowledge is really important to help industry figure out how organic cells perform and age so that their life spans will be extended.”